Master Data Management Program

December 2012


INTRODUCTION

What is Master Data?

A Google search for a definition of Master Data returns about 11 million hits (at the time of this writing). To define our position, we will start with this idea:

- “Master Data is the business critical data stored in disparate systems and spread across our Enterprise.”

That is, Master Data is the specific, unique data needed to support any function within our business system.

What Master Data isn't

As a general rule, Master Data is not the dynamic or transactional data used in our system. Data that represents financials, purchase order status, product pricing, etc. is either the result of a specific transaction or a snapshot in time of a company measure. The dynamic nature of this data does not lend itself to active management; instead, it is controlled through data quality rules designed into the transactional system.

Master Data Management

Master Data Management (MDM) is the set of processes, procedures and tools that keep Master Data unique and relevant. The ultimate goal is a single data set that is unique, correct and complete in every way. Additionally, any data added through consolidation or acquisition is prepared and treated to these standards during migration. Four general "buckets" of Master Data will be considered:

- Parties: all parties we conduct business with such as customers, prospects, individuals, suppliers, partners, etc.

- Places: physical places and their segmentations such as plant locations, sub-organizations, subsidiaries, entities, etc.

- Things: products, services, packages, items, financial services, etc.

- Financial and Organizational: all roll-up hierarchies used in many places for reporting and accounting purposes such as organization structures, sales territories, chart of accounts, cost centers, business units, profit centers, price lists, etc.

PROBLEMS TO ADDRESS

Every program introduced into a business exists to solve specific business system needs or issues, remove waste and generate value. An MDM program is no different, so this section outlines some of the critical business issues that exist because we lack a clear, defined data management program.

Existing business issues:

- Data Quality

- Item Master Record Integrity;

- Bill of Material Record Integrity;

- Customer Record Integrity;

- Supplier Record Integrity;

- Data Input Process Control;

- Invoice Matching Against Price Sheets

- Data Consolidation

- Item visibility; commodity item usage and duplication, by division or across Terex as a whole, are not visible without an enormous amount of query and research work.

- Inventory Management; we are currently stocking identical items in multiple locations without the ability to cross reference alternative components.

- Standard Descriptions; without standardized descriptive attributes in product, customer and supplier data, we spend a large amount of time searching and never fully trust our results.

- Item Cross Referencing; without the ability to cross reference items across Terex, we do not know whether an item already exists in the system, which makes it easier to create a new item than to find an existing one.

- Commodity costing; because we cannot see how many of a specific component we buy, or where we buy it, we cannot use our purchasing volume to get the best cost across the company.

- Item Commonality; we do not govern commonality or reuse, which drives inventory management and BOM maintenance costs higher.

THE AWP PLAN

With the implementation of the TMS (Oracle) business system in 2011/2012, Terex AWP has made fundamental changes in the way the business is managed, organized and reported. Because this implementation brings all AWP data into the larger Terex system, it is imperative that we take steps to cleanse all legacy data and continuously improve the quality and reliability of the information within this new system.

The following sections help define our AWP MDM maturity level and outline an MDM Vision and Strategy for Terex AWP. The outline is written with the understanding that the program must scale to the larger business.

MDM GOVERNANCE MATURITY MODEL

This section reproduces an article that clearly describes the phases of an MDM initiative in terms of business maturity. It is important for understanding our current state, and that understanding needs to inform much of the planning and communication work around this high-value initiative.

The original graphs and text can be found here:

The DataFlux Data Governance Maturity Model

(ARTICLE)

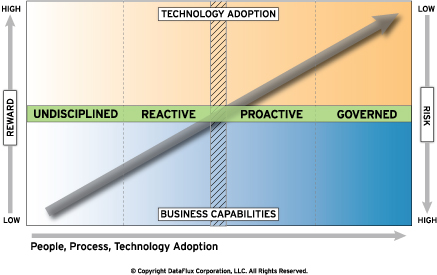

While achieving a single, unified enterprise view is an evolutionary process, an organization’s growth toward this ultimate goal invariably follows an understood and established path, marked by four distinct stages.

Companies that plan their evolution in a systematic fashion gain over those that are forced to change by external events. The Data Governance Maturity Model helps control that change by determining what stage is appropriate for the business – and how and when to move to the next stage. Each stage requires certain investments, both in internal resources and from third-party technology. However, the rewards from a data governance program escalate while risks decrease as the organization progresses through each stage.


Stage One – Undisciplined

At the initial stage of the Data Governance Maturity Model, an organization has few defined rules and policies regarding data quality and data integration. The same data may exist in multiple applications, and redundant data resides in different sources, formats and records. Companies in this stage have little or no executive-level insight into the costs of bad or poorly-integrated data.

Stage Two – Reactive

A reactive organization locates and confronts data-centric problems only after they occur. Enterprise resource planning (ERP) or customer relationship management (CRM) applications perform specific tasks, and organizations experience varied levels of data quality. While certain employees understand the importance of high-quality information, overall corporate management support – as well as a designated team for data management – is lacking.

Stage Three – Proactive

Reaching the proactive stage of the maturity model gives companies the ability to avoid risk and reduce uncertainty. At this stage, data goes from an undervalued commodity to an asset that can be used to help organizations make more informed decisions.

A proactive organization implements and uses customer or product master data management (MDM) solutions – taking a domain-specific approach to MDM efforts. The choice of customer or product data depends on the importance of each data set to the overall business. A retail or financial services company has obvious reasons to centralize customer data. Manufacturers or distributors would take product-centric approaches. Other companies may identify corporate assets, employees or production materials as a logical starting point for MDM.

Stage Four – Governed

At the governed stage, an organization has a unified data governance strategy throughout the enterprise. Data quality, data integration and data synchronization are integral parts of all business processes, and the organization is more agile and responsive due to the single, unified view of the enterprise.

At this stage, business process automation becomes a reality, and enterprise systems can work to meet the needs of employees, not vice versa. For example, a company that achieves this stage can focus on providing superior customer service, as they understand various facets of a customer’s interactions due to a single repository of all relevant information. Companies can also use an MDM repository to fuel other initiatives, such as refining the supply chain by using better product and inventory data to leverage buying power with the supplier network.

Applying the Data Governance Maturity Model

Any organization that wants to improve the quality of its data must understand that achieving the highest level of data management is an evolutionary process. A company that has created a disconnected network filled with poor-quality, disjointed data cannot expect to progress to the latter stages quickly. The infrastructure – both from an IT standpoint as well as from corporate leadership and data governance policies – is simply not in place to allow a company to move quickly from the undisciplined stage to the governed stage.

However, the Data Governance Maturity Model shows that data management issues – including data quality, data migrations, data integration and MDM – are not “all or nothing” efforts. For example, companies often assume that a solution that centralizes their customer data or product data is the panacea for their problematic data and that they should implement a new system immediately. But the lessons of large-scale ERP and CRM implementations, where a vast majority of implementations failed or underperformed, illustrate that the goals of data management cannot be met solely with technology. The root cause of failure is often a lack of support across all phases of the enterprise.

To improve the data health of the organization, organizations must adapt the culture to a data governance-focused approach – from how staff collects data to the technology that manages that information. Although this sounds daunting, the successes enjoyed by an organization in earlier stages can be reapplied on a larger scale as the organization matures. The result is an evolutionary approach to data governance that grows with the organization – and provides the best chance for a solid, enterprisewide data management initiative.

(END ARTICLE)

MDM VISION

An MDM vision defines the end-state goals for a completed MDM program and includes, but is not limited to:

- A governance and management body is in place managing business standards and process development company-wide

- Completed and continuously maintained libraries of standard data models

- All data meets six dimensions of quality: completeness, accuracy, conformity, de-duplication, consistency and integrity

- Find all the data in one place in a simple user interface

- Be confident the data is accurate, up to date and complete

- Have standards and process controls driving data quality

- Have complete visibility of all data as a consolidated, unique data set

- Utilize data attribution to automate look-ups and drive standardized descriptive elements

- Utilize poka-yoke (mistake-proofing) filtering on data input fields to prevent errors at entry
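As a rough illustration of how three of the six quality dimensions (completeness, conformity and de-duplication) and poka-yoke input filtering could be checked automatically, the Python sketch below validates supplier records. It is a minimal sketch under stated assumptions: the field names (`supplier_id`, `name`, `postal_code`, `country`), the US ZIP format rule and the name-normalization rule are hypothetical and are not taken from the TMS (Oracle) schema.

```python
import re
from dataclasses import dataclass

# Hypothetical required fields for a supplier record (assumption, not a TMS schema).
REQUIRED_FIELDS = ("supplier_id", "name", "postal_code", "country")

# Hypothetical conformity rule: US postal codes must be 12345 or 12345-6789.
US_ZIP = re.compile(r"^\d{5}(-\d{4})?$")

# Hypothetical legal suffixes ignored when comparing supplier names.
LEGAL_SUFFIXES = {"INC", "LLC", "CORP", "CO", "LTD"}


@dataclass
class QualityReport:
    incomplete: list     # supplier_ids missing one or more required fields
    nonconforming: list  # supplier_ids whose postal code breaks the format rule
    duplicates: list     # groups of supplier_ids whose names normalize identically


def normalize_name(name: str) -> str:
    """Upper-case, drop punctuation and legal suffixes so near-identical names match."""
    cleaned = re.sub(r"[^A-Z0-9 ]", "", name.upper())
    return " ".join(t for t in cleaned.split() if t not in LEGAL_SUFFIXES)


def missing_fields(rec: dict) -> list:
    """Completeness: required fields that are absent or blank."""
    return [f for f in REQUIRED_FIELDS if not str(rec.get(f, "")).strip()]


def postal_conforms(rec: dict) -> bool:
    """Conformity: blank values are a completeness problem, not a conformity one."""
    pc = str(rec.get("postal_code", "")).strip()
    return rec.get("country") != "US" or not pc or bool(US_ZIP.match(pc))


def reject_reasons(rec: dict) -> list:
    """Poka-yoke gate: reasons a new record would be refused at input time."""
    reasons = [f"missing {f}" for f in missing_fields(rec)]
    if not postal_conforms(rec):
        reasons.append("postal_code must look like 12345 or 12345-6789")
    return reasons


def check_quality(records: list) -> QualityReport:
    """Audit records already in the system against the same rules."""
    incomplete, nonconforming = [], []
    groups = {}
    for rec in records:
        rid = rec.get("supplier_id")
        if missing_fields(rec):
            incomplete.append(rid)
        if not postal_conforms(rec):
            nonconforming.append(rid)
        # De-duplication: group records whose normalized names collide.
        key = normalize_name(str(rec.get("name", "")))
        if key:
            groups.setdefault(key, []).append(rid)
    duplicates = [ids for ids in groups.values() if len(ids) > 1]
    return QualityReport(incomplete, nonconforming, duplicates)
```

The same rule functions serve both purposes: `reject_reasons` blocks bad data at entry where a permanent control is possible, and `check_quality` audits data already in the system where it is not.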

MDM STRATEGY

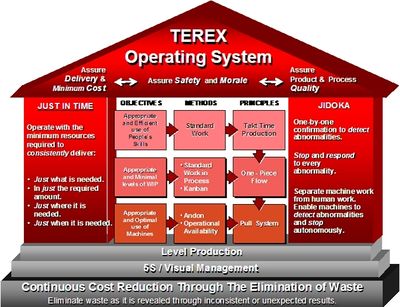

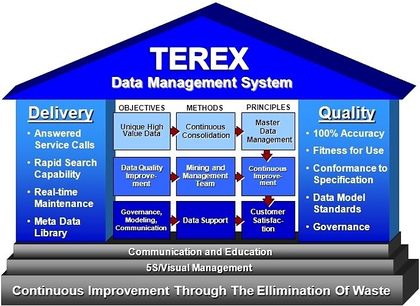

It is absolutely understood that all data management initiatives must be in alignment with our overall business objectives and to insure this relationship stays in place as the business goals change. This strategy is not going to detail how to implement a specific defined program or corporate initiative, it is rather an outline that will define achievable MDM goals with reasons and standards for the implementation. Additionally these initiatives must follow the Terex Operating System model using lean methods and decision event management.

|

|

| TOS House representing business processes | DQM House representing data management initiatives |

KEY PLAN INITIATIVES

- Data Input Quality Mitigation

- Evaluate all data inputs for format controls, data type filtering and error checking with validation. Where possible, put permanent controls in place. Where that is not possible, put audit feedback systems in place to continuously monitor data quality as data enters the system.

- Data Model Creation and Maintenance

- Construct data models as defined by the governance committee to be used as the critical governing standards for all data types. Publish model definitions which identify data ownership, usage and format. Control all model documentation using change management protocols.

- Data Cleansing Process Development and Deployment

- Build a data mining and maintenance technical team (DMMT Team) to continuously find and repair existing data in the system. Employ third-party mining and cleansing tools to cleanse all data elements to data model standards. It is imperative to instill clear lean principles in this team, including the application of Data 5S.

- Data Attribution and Cataloging Development

- Develop descriptive attributes for all data types and get consensus on attribute validity. Use the validated attributes to set up internal TMS parts catalogs for automating item identifiers (numbers) and standardized, consistent descriptions.

- Data Consolidation and Cross Referencing

- Consolidate all identical items and cross reference them to a single unique item identifier. Make the cross reference bi-directional, so that a look-up from any existing number finds the unique item number, and a look-up from the unique item number finds all information on every number linked to it. This consolidation and cross referencing is applied to product, customer (e.g. addresses) and supplier data. The single unique reference becomes the business-critical data hub for all business-side transactions and reporting.

- Continuous Data Quality Improvement

- Utilize the Governance and DMMT teams to continuously create new methods and processes for data quality improvement. Communicate all work in this area to all relevant parts of the business, and from this work define business process templates that may be used in other Terex companies.
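The attribution, consolidation and cross referencing initiatives above can be sketched together. The Python sketch below assumes that each commodity class has an agreed, ordered attribute set (here a hypothetical `FASTENER` class with `type`, `thread`, `length_mm` and `grade`), builds a standardized description from those attributes, treats items with identical attribute values as the same item, and keeps a two-way cross reference between existing part numbers and one unique item identifier. The class, attribute names, part numbers and `AWP-000001` identifier format are illustrative assumptions, not TMS catalog definitions.

```python
from collections import defaultdict

# Hypothetical catalog template: attribute order defines the standard description.
CATALOG = {
    "FASTENER": ("type", "thread", "length_mm", "grade"),
}


def standard_description(item_class: str, attrs: dict) -> str:
    """Build a consistent description from validated attributes."""
    values = [str(attrs[name]).strip().upper() for name in CATALOG[item_class]]
    return ", ".join([item_class] + values)


class CrossReference:
    """Two-way map between existing part numbers and a single unique item id."""

    def __init__(self):
        self.unique_by_legacy = {}                 # existing number -> unique id
        self.legacy_by_unique = defaultdict(list)  # unique id -> existing numbers
        self.desc_by_unique = {}                   # unique id -> standard description
        self._unique_by_desc = {}                  # standard description -> unique id
        self._next = 1

    def add(self, legacy_number: str, item_class: str, attrs: dict) -> str:
        """Consolidate: identical attribute sets resolve to the same unique id."""
        if legacy_number in self.unique_by_legacy:
            raise ValueError(f"{legacy_number} is already cross referenced")
        desc = standard_description(item_class, attrs)
        unique_id = self._unique_by_desc.get(desc)
        if unique_id is None:  # first time this attribute set is seen: mint a new id
            unique_id = f"AWP-{self._next:06d}"
            self._next += 1
            self._unique_by_desc[desc] = unique_id
            self.desc_by_unique[unique_id] = desc
        self.unique_by_legacy[legacy_number] = unique_id
        self.legacy_by_unique[unique_id].append(legacy_number)
        return unique_id

    def lookup(self, number: str) -> dict:
        """Accept an existing or a unique number; return the unique id and all linked numbers."""
        unique_id = self.unique_by_legacy.get(number, number)
        if unique_id not in self.desc_by_unique:
            raise KeyError(number)
        return {
            "unique_id": unique_id,
            "description": self.desc_by_unique[unique_id],
            "legacy_numbers": list(self.legacy_by_unique[unique_id]),
        }
```

Matching on exact standardized attribute values is the simplest consolidation rule; in practice the DMMT Team would likely need fuzzier matching, with human review, before merging records.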

WORK TO DATE

Tools Investigation

- Oracle SQL Developer

- Oracle SQL Developer is an integrated development environment that simplifies the development and management of Oracle Database. SQL Developer offers a worksheet for running queries and scripts and a reports interface.

- Datactics Flow Designer

- Datactics v5 may be used within multiple data domains from name and address data through to product data (whether item or catalog data) to financial data. It's a functional mining and management tool for both initial clean-up and maintenance.

- Dataload Pro

- DataLoad is a powerful tool used by non-technical and technical users alike to load data and configuration into ERP, CRM and operational systems such as Oracle E-Business Suite.

- iLink ePDM Integrator

- iLink ePDM integrator provides a bi-directional connection and simple user interface for managing all product data in the engineering ePDM system.

Oracle MDM Insight Program

- Product Data Analysis

- Part of the Insight process was an analysis of our functional product data for completeness, correctness, compliance and consistency.

- Oracle Set-Up analysis

- The set-up analysis examined how AWP built its instance of Oracle 11i and what we could do to address dynamic data issues.

- Process Analysis

- The process analysis looked at where redundant, complex or incomplete data enters the system, identifying input problems that need to be mitigated.

- Business Case Development & Presentation

- The result of the Oracle Insight Program is a business case presentation which identifies in some detail where the identified improvements can be applied in our business and the direct cost savings for doing so.

- This is the 30-page presentation given to the leadership team by the Oracle Insight team. The final 100-slide presentation is available on request.

IBM Discovery Workshop

- January Workshop Sample Questions Slide Deck

- Results - TBA